Render farm cost is usually based on total processing power, measured in GHz hours for CPU and OctaneBench (OB) hours for GPU. Are these good pricing models?

When using a service, what are you interested in? For me, the two most important things are quality and price. Quality always comes first. However, when the quality of service providers is similar, I will choose the one with the more reasonable price. The same goes for render farms: besides rendering speed, I care a lot about the price. Honestly, when I first started using a render farm, I didn’t understand what a GHz hour or an OB hour was, or how the render farm calculated my rendering costs.

Because I didn’t understand how the price was calculated, I questioned the transparency of the render farm cost. Is there a hidden fee? Are they using any tricks to make the pricing plan look more appealing? So I spent some time learning about the pricing of many render farms.

Next, I will share what I know.

How does render farm cost work?

Each render farm has its own method of calculating rendering costs. The methods may be slightly different, but they are all based on how much computing power the render farm sells to users per hour. Usually, the rendering cost of a project is calculated from the total processing power working on the job, measured in GHz hours for CPU and OB hours for GPU.

What is a GHz hour?

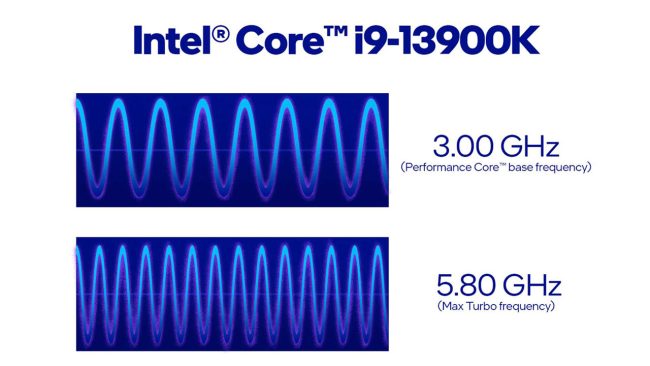

Gigahertz (GHz) is the unit of a CPU’s clock speed (also called “clock rate” or “frequency”). The clock speed is the number of cycles the CPU executes per second. A CPU with a clock speed of 3.2 GHz, for example, executes 3.2 billion cycles per second. (Older CPUs’ clock speeds were measured in megahertz, or millions of cycles per second.) A higher clock speed generally means a faster CPU.

However, sometimes, multiple tasks are completed in a single clock cycle; in other cases, one task might be handled over multiple clock cycles. Since different CPU designs handle tasks differently, it’s best to compare clock speeds within the same CPU brand and generation. For example, a CPU with a higher clock speed from five years ago might be outperformed by a new CPU with a lower clock speed, as the newer architecture deals with tasks more efficiently.

So that’s GHz. What about a GHz hour? It is the total computing power of a CPU running for 1 hour: the number of cores times the clock speed of those cores. For instance, an Intel Xeon E5645 has 6 cores and a clock speed of 2.40 GHz. So this CPU running for 1 hour delivers 6 (cores) x 2.4 (GHz) x 1 hour = 14.4 GHz hours of computing power. If the price per GHz hour is $0.015 and you use the server for 2 hours, you will pay:

14.4 x 0.015 x 2 = $0.432

The cost will double if the server has 2 identical CPUs, or multiply by the number of nodes if you use more than 1.

Summing up,

The cost of a project = the number of cores x the clock speed x the render time x price.
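The formula above can be sketched in a few lines of Python. This is only an illustration: the function and parameter names are my own, not taken from any render farm's calculator, and the extra `cpus_per_node` and `nodes` factors are my way of covering the "cost will double or multiply" remark.

```python
def ghz_hour_cost(cores, clock_ghz, hours, price_per_ghz_hour,
                  cpus_per_node=1, nodes=1):
    """Cost = cores x clock speed x render time x price,
    scaled by CPUs per node and number of nodes (hypothetical helper)."""
    ghz_hours = cores * clock_ghz * hours * cpus_per_node * nodes
    return ghz_hours * price_per_ghz_hour


# The Xeon E5645 example from the text: 6 cores at 2.4 GHz,
# 2 hours of rendering at $0.015 per GHz hour.
single_cpu = ghz_hour_cost(6, 2.4, 2, 0.015)
dual_cpu = ghz_hour_cost(6, 2.4, 2, 0.015, cpus_per_node=2)

print(f"one CPU:  ${single_cpu:.3f}")  # one CPU:  $0.432
print(f"two CPUs: ${dual_cpu:.3f}")    # two CPUs: $0.864
```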

The core hour concept is similar to the GHz hour, except that you skip the step of multiplying by the clock speed of the cores.

This pricing model is not really a good one, but I will discuss that further below.

What is an OB hour?

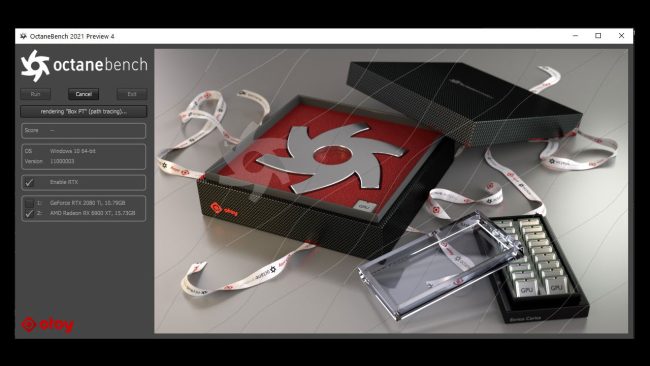

OctaneBench (OB) is an application created by OTOY that lets you benchmark your GPU using OctaneRender. It is one of the most popular GPU rendering benchmark tools for measuring the overall computing power of any combination of graphics cards in a computer. When OctaneBench is run, it generates a combined “score” for the computer being benchmarked, expressed in OctaneBench points. Because everybody uses the same version, the same scenes and the same settings, OctaneBench points can be used to compare different systems.

So an OctaneBench hour (OB hour) is the computing power that a system of one or more GPUs delivers in one hour. An RTX 2080 Ti scores 355 OctaneBench points. If this GPU runs for 1 hour, it delivers 355 OB hours’ worth of computing power. If the price per OB hour is $0.01 and you use this server for 2 hours, you will pay:

355 x 0.01 x 2 = $7.1

The cost multiplies with the number of GPUs in a node and the number of nodes you use. Here is one more example. A server with 8 RTX 3090 cards has 5292 OctaneBench points (one RTX 3090 scores about 661 points). If the price per OB hour is $0.01 and I use 5 servers for 2 hours, I will pay:

5292 x 5 x 2 x 0.01 = $529.2

Summing up,

The cost of a project = OctaneBench score x the render time x price.

Are GHz hour and OB hour good pricing models? Why do render farms use them?
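The same calculation for GPU rendering, as a minimal Python sketch. The function name and the `nodes` parameter are my own assumptions for illustration; the numbers are the two examples from the text.

```python
def ob_hour_cost(ob_score, hours, price_per_ob_hour, nodes=1):
    """Cost = OctaneBench score x render time x price,
    multiplied by the number of nodes (hypothetical helper)."""
    return ob_score * hours * price_per_ob_hour * nodes


# One RTX 2080 Ti (355 points) for 2 hours at $0.01 per OB hour.
one_gpu = ob_hour_cost(355, 2, 0.01)

# Five servers of 8 x RTX 3090 (5292 points each) for 2 hours.
five_servers = ob_hour_cost(5292, 2, 0.01, nodes=5)

print(f"one GPU:      ${one_gpu:.2f}")       # one GPU:      $7.10
print(f"five servers: ${five_servers:.2f}")  # five servers: $529.20
```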

The GHz hour and OB hour pricing models are widely used by render farms around the world. Why is that? Online render farms usually use GHz hour for CPU rendering because they have servers from several generations of hardware. With hardware performance being uneven, they are trying to find a common denominator on which to build a pricing strategy.

(This is my personal opinion, please prove me wrong. I wonder whether render farms break the price down into smaller units like GHz hour or OB hour to make it look cheaper, so they can attract more users. Or maybe they just want to confuse users like me, hmmm.)

I’ll talk about GHz hour first. For CPU render farms whose nodes vary in core count or clock speed, the GHz hour model seems the most accurate and fair. Since almost all render farms use this model, it also seems a good way to compare render farms, assuming all other factors are equal.

Yes, assuming all factors are equal. This is where the problem arises. I read an interesting old blog post from RenderStreet about this. Here is what I concluded from that article, combined with my own opinion.

1. Not all cores are created equal.

As I mentioned above, newer CPUs often outperform older ones in benchmark tests even when they have similar clock speeds. One reason is that newer architectures handle tasks more efficiently per clock cycle. So it’s only accurate to compare CPUs from the same brand and generation.

Within a render farm, CPUs vary in brand and generation (perhaps because the hardware is updated over time). Between different render farms, the difference is even bigger. I know many render farms keep old processors because they are stable and economical, but slow; the farms use thousands of CPUs to make up for it. Only a few render farms update their hardware from time to time.

2. A core’s definition varies from render farm to render farm.

Render farms usually define a core the way we normally understand it. But there are some cases we need to pay attention to. For example, some render farms count one core with hyperthreading as two separate cores. Some render farms use the number of threads to calculate the GHz hour. Moreover, Intel’s 12th Gen CPUs offer two different kinds of cores in one CPU package: E-cores and P-cores. Will the render farm count only the P-cores (the same cores we’ve known for years) or both kinds when calculating the GHz hour?
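To see how much the definition of a core matters, here is a small Python sketch that bills the same hypothetical 6-core, 2.4 GHz CPU in two ways. The assumption of two hardware threads per core, the price of $0.015 and the helper name are all mine, chosen only for illustration.

```python
def billed_ghz_hours(counted_cores, clock_ghz, hours):
    """GHz hours as a farm would bill them, given how it counts cores."""
    return counted_cores * clock_ghz * hours


physical_cores = 6
threads = physical_cores * 2   # assumes 2 hardware threads per core
price_per_ghz_hour = 0.015     # hypothetical price

by_cores = billed_ghz_hours(physical_cores, 2.4, 1) * price_per_ghz_hour
by_threads = billed_ghz_hours(threads, 2.4, 1) * price_per_ghz_hour

print(f"billed per physical core: ${by_cores:.3f}")    # $0.216
print(f"billed per thread:        ${by_threads:.3f}")  # $0.432
```

The listed price per GHz hour is identical in both cases, yet the same hour on the same CPU costs twice as much when threads are counted as cores.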

The OB hour model is also quite similar. You think you can compare prices between render farms based on OB hour? It’s not that simple.

3. GPUs vary within a render farm, and from farm to farm.

As with CPUs, GPU performance differs among brands and generations. Newer GPUs with newer architectures deliver higher performance and better power efficiency. Newer GPUs also have more cores and more VRAM. VRAM is quite an important factor, because in GPU rendering the scene needs to fit in VRAM. I can’t render if the scene requires more memory than the GPUs have. VRAM also does not add up when there are multiple GPUs in a system, unless NVLink is used to pool the memory of NVIDIA cards. However, NVLink is only available on certain GPU models.

In summary, what looks like the better price isn’t always so. This render farm costs $100? It takes just 10 minutes to finish. That render farm costs only $10, but it takes a full day to render. A farm that appears more expensive may deliver more performance for the same money. In that case, you get better value, i.e. higher performance per dollar.

Another important thing I want to talk about is:
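A rough Python sketch of why the unit price alone can mislead. Every number here is hypothetical: I assume the same job needs 1000 GHz hours on a farm with older CPUs but only 600 GHz hours on a farm with newer, more efficient CPUs that charges more per GHz hour.

```python
def job_cost(ghz_hours_needed, price_per_ghz_hour):
    """Total cost of a job = GHz hours it consumes x unit price."""
    return ghz_hours_needed * price_per_ghz_hour


# Hypothetical farms rendering the same job.
old_farm = job_cost(ghz_hours_needed=1000, price_per_ghz_hour=0.015)
new_farm = job_cost(ghz_hours_needed=600, price_per_ghz_hour=0.02)

print(f"older hardware, cheaper unit price: ${old_farm:.2f}")  # $15.00
print(f"newer hardware, higher unit price:  ${new_farm:.2f}")  # $12.00
```

Under these assumed numbers, the farm with the higher price per GHz hour ends up cheaper for the whole job, which is why a test render tells you more than the price list.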

4. I don’t know the hardware I’m using.

Most render farms state the CPU or GPU of their servers, either as the name of the CPU / GPU model or as the Cinebench / OctaneBench scores of the nodes. A few render farms don’t even do that. Either way, I don’t know the hardware my project is actually rendering on. Is the hardware exactly what the render farm tells me? I can never get an answer to this question at render farms that use the GHz hour or OB hour concept, in other words, SaaS render farms.

Is there a better pricing model? Node hour?

Node hour represents the price for 1 hour of rendering on a node (CPU or GPU node). The math now is really easy.

The cost of a project = The render time x price (x the number of nodes, if you use more than 1).
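As a minimal Python sketch, with a made-up price of $8 per node hour and a helper name of my own, the node hour formula looks like this. Taking the elapsed time in minutes also shows how a running cost could be tracked while a job is in progress.

```python
def node_hour_cost(elapsed_minutes, price_per_node_hour, nodes=1):
    """Cost = render time (in hours) x price per node hour x nodes."""
    hours = elapsed_minutes / 60
    return hours * price_per_node_hour * nodes


# Hypothetical price: $8 per node hour.
finished_job = node_hour_cost(120, 8, nodes=5)  # 2 hours on 5 nodes
running_job = node_hour_cost(45, 8)             # 45 minutes so far, 1 node

print(f"finished job:    ${finished_job:.2f}")  # finished job:    $80.00
print(f"running so far:  ${running_job:.2f}")   # running so far:  $6.00
```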

However, the issues I talked about above remain the same in the node hour model of SaaS render farms. The configuration of each node is different, and I still don’t know which node I’m rendering on.

I think IaaS render farms have a better model. This kind of farm uses node hour, like some SaaS render farms, but IaaS render farms allow me to control the remote server. That’s why I can choose the exact configuration I want to work on. More importantly, I can check whether the hardware I’m using is really what the render farm says. Clear and transparent. I am even able to calculate the rendering cost in real time based on my usage time.

Final words on render farm cost

All in all, GHz hour and OB hour are two pricing units widely used by render farms all over the world. Some render farms also use core hour or node hour. However, under SaaS render farms these models are somewhat unclear and confusing, because users cannot calculate the cost during the render process and don’t know the exact hardware they are rendering on. The node hour model of IaaS farms, by contrast, offers both: users know the hardware and can calculate the render farm cost in real time.
